How to download an entire website: several methods


When you need to download an entire website (not just a webpage) for offline use, you can use one of these CLI and GUI applications.

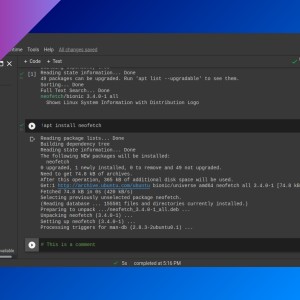

wget (CLI)

Yes, you can also use wget to download a whole website, not only standalone files. Use the -r (recursive) option together with -l <level> (maximum recursion depth).

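wget -r -l 1 http://example.com

This creates a folder named after the host (here, example.com) inside your working directory, containing all the downloaded files. -l 1 follows one level of links; without -l, wget uses a default maximum depth of 5.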
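For offline browsing you will usually want a few more options than -r and -l. The sketch below assumes GNU wget and a POSIX shell. mirror_site and DRY_RUN are hypothetical names used only for illustration. DRY_RUN prints the command instead of running it. The extra flags are standard GNU wget options: --convert-links rewrites links so the local copy works offline, --page-requisites also fetches the images and stylesheets each page needs, and --no-parent keeps wget from climbing above the starting directory.

```shell
#!/bin/sh
# Minimal sketch assuming GNU wget. mirror_site and DRY_RUN are
# hypothetical names used only for this example.
#
# Options (standard GNU wget flags):
#   --recursive        long form of -r
#   --level=N          long form of -l N: follow N levels of links
#   --convert-links    rewrite links so the local copy works offline
#   --page-requisites  also fetch images, CSS, etc. that each page needs
#   --no-parent        never ascend above the starting directory
mirror_site() {
  local url="$1"
  local depth="${2:-1}"  # defaults to one level, as in the command above
  # When DRY_RUN is non-empty, "echo" is prepended so the command is
  # printed instead of executed; otherwise wget runs normally.
  ${DRY_RUN:+echo} wget \
    --recursive \
    --level="$depth" \
    --convert-links \
    --page-requisites \
    --no-parent \
    "$url"
}
```

For example, DRY_RUN=1 mirror_site http://example.com 1 only prints the wget command. Calling mirror_site http://example.com 1 without DRY_RUN actually downloads the site.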

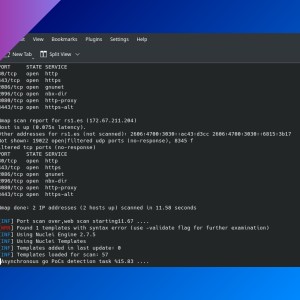

HTTrack (CLI & GUI)

HTTrack comes with a command-line tool and a web-based interface for downloading websites. The CLI tool is very easy to use (the -r<level> parameter limits the recursion depth):

httrack -r2 http://example.com

We use -r2 to download one level of recursion. -r1 only downloads the single webpage and does not follow any links.
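If you run HTTrack regularly, a small wrapper can pick the output directory as well as the depth. The sketch below assumes the httrack CLI is installed and uses its -O option (output path) and the attached -rN depth form. mirror_httrack, the ./mirror default directory and DRY_RUN are hypothetical names chosen for this example.

```shell
#!/bin/sh
# Minimal sketch assuming the httrack CLI is installed. mirror_httrack,
# the ./mirror default and DRY_RUN are hypothetical names for this example.
#   -O <dir>  write the mirror (plus its logs and cache) into <dir>
#   -rN       mirror depth N (1 = only the start page)
mirror_httrack() {
  local url="$1"
  local depth="${2:-2}"          # 2 = start page plus the pages it links to
  local outdir="${3:-./mirror}"  # hypothetical default output directory
  # When DRY_RUN is non-empty, print the command instead of running it.
  ${DRY_RUN:+echo} httrack "$url" -O "$outdir" "-r$depth"
}
```

For example, mirror_httrack http://example.com 2 ./example-copy saves a two-level copy into ./example-copy. Prefix the call with DRY_RUN=1 to check the command first.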

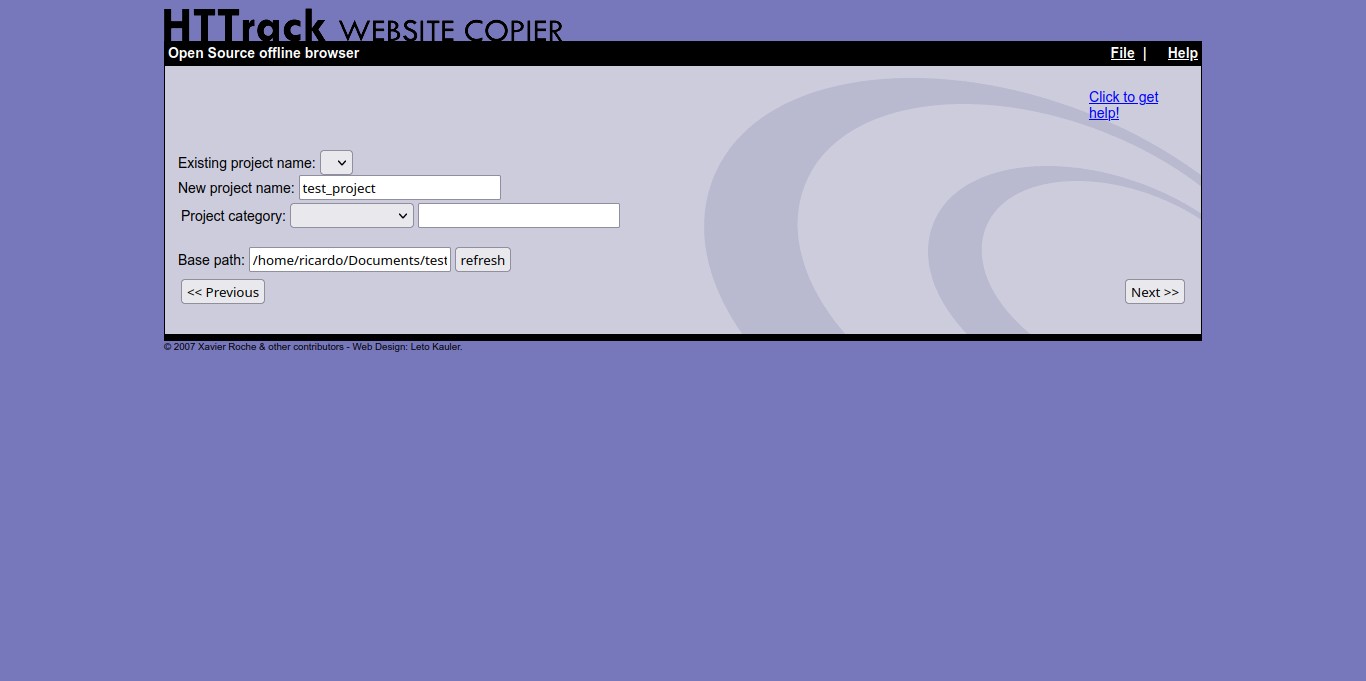

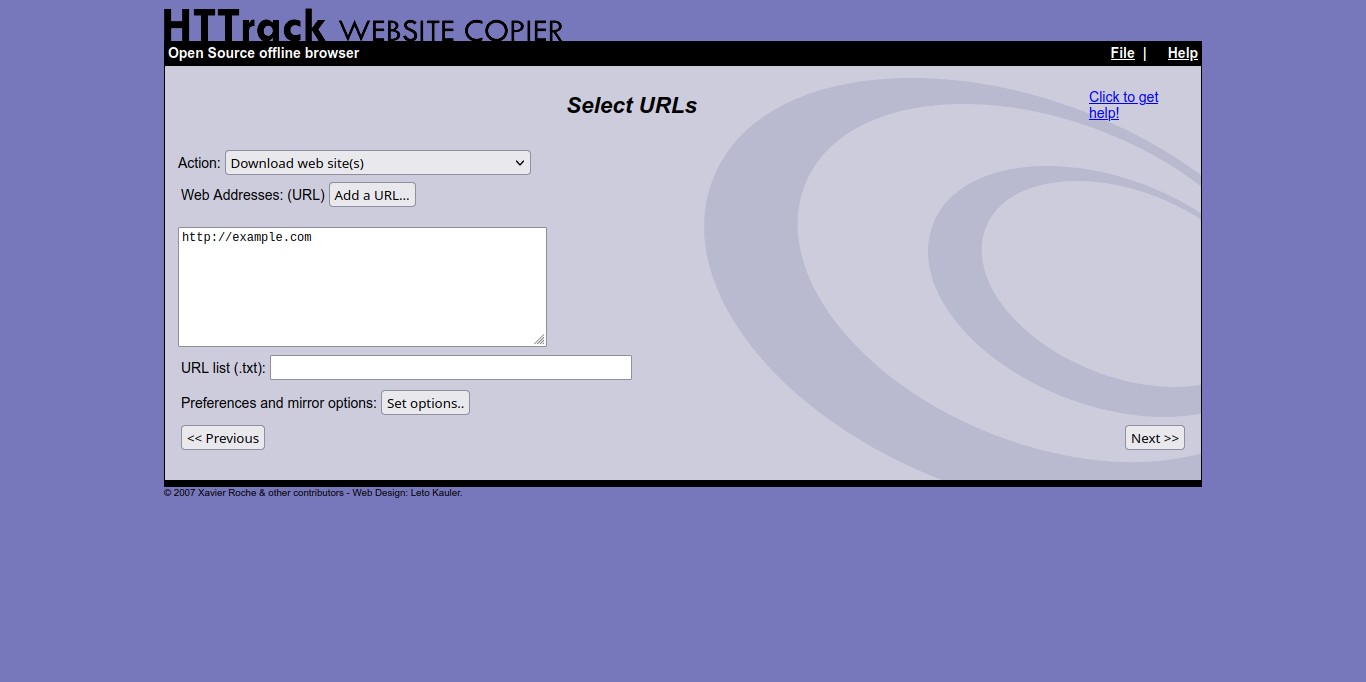

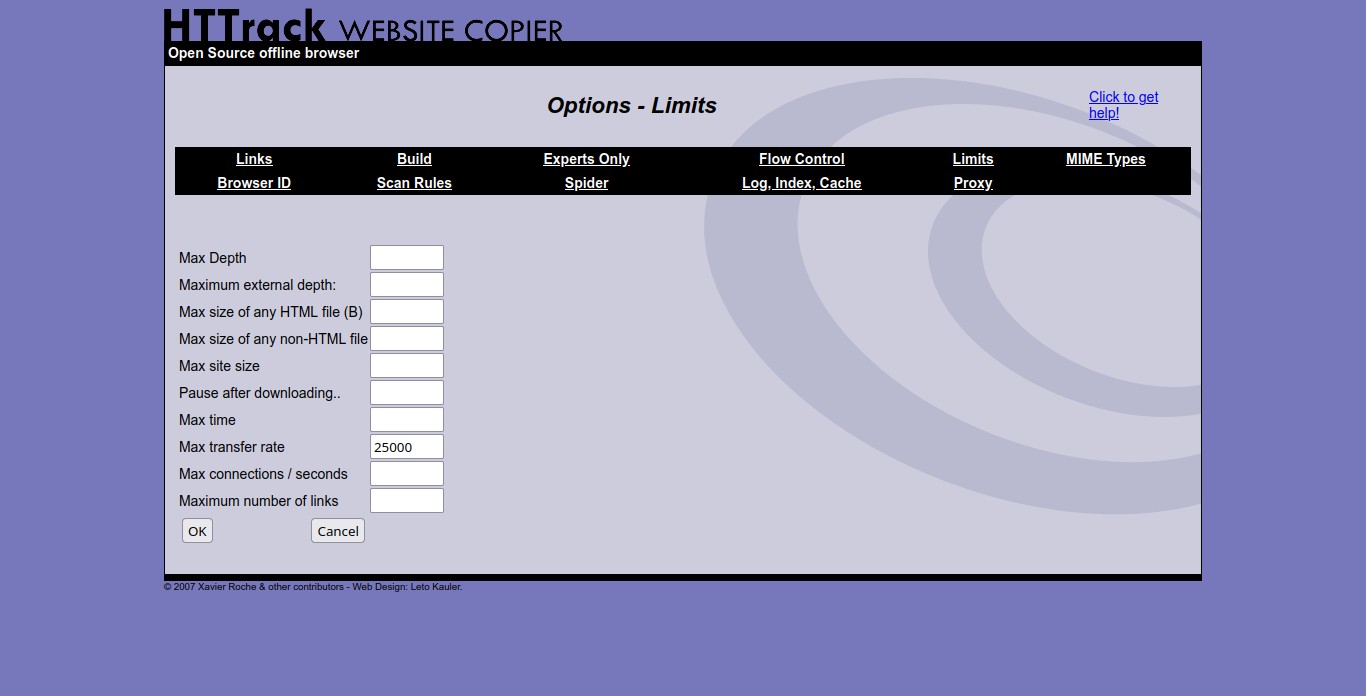

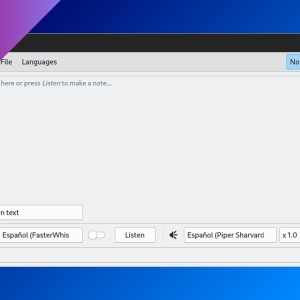

The web interface is called “WebHTTrack Website Copier”, and you can launch it from your system's program menu. Then just follow the steps; the process is very straightforward.

If you have any suggestions, feel free to contact me via social media or email.
