Finding duplicated files: several command-line methods


You can find duplicated files based on their content using the Terminal. These are some of the ways to achieve it.

For GUI apps, check Finding duplicated files (II): GUI apps.

Using find, sort and uniq

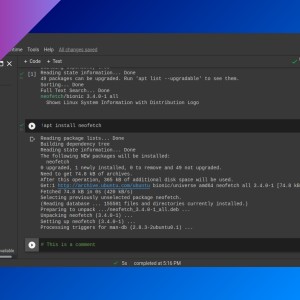

find . -type f -exec md5sum {} \; | sort | uniq -w32 -dD

- This uses find to search for files (-type f) in the current directory and its subdirectories, and computes an MD5 checksum for each one (-exec md5sum {} \;). It then sorts the output (sort). Because every line starts with its checksum, this groups identical checksums together. Finally, it runs uniq to print every line whose checksum is duplicated (-dD), comparing only the first 32 characters of each line, which are the MD5 checksum (-w32).
- If you use another hash function, you'll need to change the uniq command.
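The -w value is simply the length of the hexadecimal digest that the hash tool prints: 32 characters for MD5, 40 for SHA-1 and 64 for SHA-256. Here is a minimal sketch, assuming GNU coreutils. The hypothetical helper find_dupes (not a standard tool) works out that width by hashing empty input, so the same pipeline runs unchanged with md5sum, sha1sum or sha256sum.

```shell
# find_dupes HASH_CMD: hypothetical helper name, not a standard tool.
# Assumes a GNU-coreutils-style hash tool that prints "<hex digest>  <file name>".
find_dupes() {
  hash_cmd=$1
  # Digest width = length of the first field printed for empty input
  # (32 for md5sum, 40 for sha1sum, 64 for sha256sum).
  width=$(printf '' | "$hash_cmd" | cut -d ' ' -f 1 | tr -d '\n' | wc -c)
  # Same pipeline as above, with the uniq -w value filled in automatically.
  find . -type f -exec "$hash_cmd" {} \; | sort | uniq -w "$width" -dD
}
```

Run it from the directory you want to scan, for example: find_dupes sha256sum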

For example, SHA-1 checksums are 40 characters long, so the command becomes:

find . -type f -exec sha1sum {} \; | sort | uniq -w40 -dD

fdupes

fdupes -r .

- This searches recursively (-r) for duplicates, which fdupes identifies by comparing file sizes and MD5 signatures, followed by a byte-by-byte comparison.

More options:

- -t: show the modification time of duplicate files.
- -d: delete interactively. For each set of duplicates, fdupes asks which files to preserve and deletes the others.
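Comparing sizes first is cheap. Only files with the same size can have the same content, so only those need to be hashed. Below is a rough shell approximation of that size-then-checksum strategy. It is not fdupes' actual code, and it rests on several assumptions: GNU find (for -printf) and GNU coreutils are available, file names contain no newlines, and the function name dupes_by_size is made up. It also stops at the checksum step and does no final byte-by-byte check.

```shell
# dupes_by_size DIR: hypothetical helper approximating a size-then-checksum search.
# Assumes GNU find (-printf) and GNU coreutils; names containing newlines are not handled.
dupes_by_size() {
  # 1. Print "size<TAB>path" for every regular file under DIR.
  find "$1" -type f -printf '%s\t%p\n' |
    # 2. Keep only the paths whose size occurs more than once.
    awk '{
           tab = index($0, "\t")
           size[NR] = substr($0, 1, tab - 1)
           path[NR] = substr($0, tab + 1)
           count[size[NR]]++
         }
         END { for (i = 1; i <= NR; i++) if (count[size[i]] > 1) print path[i] }' |
    # 3. Hash only those candidates and print every line whose MD5 repeats.
    while IFS= read -r f; do md5sum "$f"; done |
    sort | uniq -w32 -dD
}
```

Usage: dupes_by_size . (files with a unique size are never hashed).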

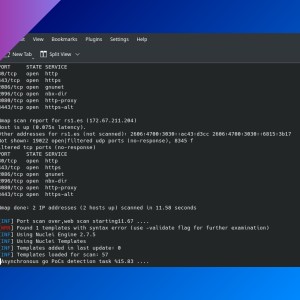

Finding duplicated file names (with the same or different content)

find . -type f | awk -F "/" '{print $NF}' | sort | uniq -d | sed 's/\s/\\ /g' | xargs -L 1 find . -name

- This finds files (find . -type f), extracts their file names (awk -F "/" '{print $NF}'), sorts them (sort), keeps only the repeated ones (uniq -d), escapes spaces with a backslash so that xargs treats each name as a single argument (sed 's/\s/\\ /g'), and finally looks up the paths of those duplicated file names (xargs -L 1 find . -name).
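The command above runs a separate find for every repeated name. Because find -name treats each name as a shell pattern, names containing characters such as * or [ may not match literally, and xargs rejects names with unmatched quotes. An alternative technique (not the author's command) groups paths by base name in a single awk pass. This is a minimal sketch that assumes file names contain no newlines. The function name dupe_names is hypothetical.

```shell
# dupe_names DIR: hypothetical helper that prints every path under DIR whose
# base name is shared with at least one other file. Assumes no newlines in names.
dupe_names() {
  find "$1" -type f |
    awk -F '/' '
      # Count each base name and remember all the paths that use it.
      { count[$NF]++; paths[$NF] = paths[$NF] $0 "\n" }
      # Print the paths of names seen more than once (group order is unspecified).
      END { for (name in count) if (count[name] > 1) printf "%s", paths[name] }'
}
```

Usage: dupe_names . prints the paths of each duplicated name together, without a second find.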

If you have any suggestions, feel free to contact me via social media or email.
